REFLECTIONS

Sonic Signals and Symbolic “Musical Space”*

Wolfgang Ernst

Sound Stage Screen, Vol. 3, Issue 2 (Fall 2023), pp. 107–29, ISSN 2784-8949. This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License. © 2024 Wolfgang Ernst. DOI: https://doi.org/10.54103/sss24027.

Part I. A Media-Archaeological Understanding

In mediated soundscapes, where technology determines the musical situation, Spaces of Musical Production and the Production of Musical Spaces are always already entangled. A media-archaeological understanding of this entanglement deals with its core media-theatrical “scenes” (in both the spatial and dramatic sense of the term “scene”). The notions of “sound” and “stage” may therefore be extended from their literal meaning to a deeper epistemological dimension: to implicit sonicity, that is, to all forms of oscillating signals as a multitude of time-critical periodic events (even beyond the mechanics of “vibrational force” [1]), and to media theatre as it unfolds within technology as its primary micro-scene.

Once the notion of “sound” is extended from the audible to a more fundamental layer—its physical essence—it is in structural affinity with electronic media processes. In academic terms, this results in an alliance between sound studies and media science. Actually sounding “music” is equivalent to media in technical being. The composition of music, on the other hand, is closer to computational algorithms than to three-dimensional “space.” When unfolding in space as sound, the symbolical regime of musical composition is rather entangled with the tempoReal than with empirical “space.”

Sound in Time vs. Musical Space

Let us therefore pay attention: the name of the journal that is co-organizing this event refers to “sound” rather than to “music.” Yet for analytic purposes, it is mandatory to differentiate between physical acoustics, harmonized sound, and conceptual music. [2]

The title of this conference, with its inversive theme, is not just a game with words. Once the sites of musical production are no longer directly coupled to the human mind and its instrumental or vocal extensions but escalate into autonomous musical technologies, “spaces of musical production” and “the production of musical space,” below the apparent social environment, appear to be suddenly “grounded” within the machine and as such call for a media-archaeological analysis of their micro-spatial circuitry. With respect to computationally processed sound, finally, “musical space” becomes a mere metaphor, and rather a direct function of the “algorhythm” itself. [3]

While perspectively constructed “space,” in the visual regime, endures, a sounding event is ephemeral by its very essence as a time signal. Sound takes place in space only as sonic time. Categories like Euclidean space and “musical” harmony relate to the abstract symbolic order of knowledge and intelligence, while dynamic spatio-temporal fields, as the “real” of music, are implicitly “sonic.” [4] Sonic signals are not simply time-based but actually time-basing. Implicitly “sonic” electromagnetic wave transmission does not even implicate space since it propagates self-inductively. “Music,” on the other hand, is the conceptual term for symbolically ordered sound. Music is “spatial” only in the geometric and diagrammatic sense.

“What does music have to do with sound?!”, composer Charles Ives once provocatively asked. [5] Let us, therefore, differentiate between, respectively: (a) room-acoustic space which is already anthropocentric, since the “acoustic” is usually defined by the range of human hearing; (b) sonic time which is the sound signal in a more fundamental, “acoustemic” sense, [6] media-archaeologically focusing on its actual physical message as a time signal; and (c) the musical diagram. With its symbolic clocking and rhythmization, made familiar by the Morse communication code, sonic signal propagation becomes “musical” when its temporality is dramatically structured and time-critically organized (both in pitch or duration and in phase).

While the architectural framing of acoustics on “stage” is spatial (such as one observes in theatres, opera houses and concert venues), within electronic apparatuses and digital devices the sonic event itself is a function of temporal production. Any “musical” composition is a geometrization of the genuinely temporal fabrics of sound, where the signal is a function of time. Musical notation (and its technological equivalent in “digitization” as much as any musical score “archive”) is a mere symbolization of sono-temporal patterns. But for the acoustic signal itself—once “in being”—there is no “space” to cross or permeate but rather genuine time properties such as delay, resonance, or reverb.

Stereophonic recording was devised to reproduce the spatial sensation of sound which had been lacking in monophonic early phonography. In stereophony, two channels correspond to binaural perception. But it is only in the human brain that the spatial impression, as with the nineteenth-century optical stereoscope, is at last “calculated” (von Foerster), not in the sensory organs. Phenomenologically, musical “space” is not an objective quality, but primarily a cognitive abstraction. A “schizophonic” [7] dissonance occurs: The cognitive mind is tentatively perceiving and processing acoustic sensations musically, while the physical body reacts to sound in an affective way.

The optical construction of space in the form of visual perspective differs from its time-critical construction in the auditory channel which actually generates a spatial sensation by calculating the run time differences of acoustic signals. This results in a merely “virtual” impression of musical space (in the sense of entirely computed objects). In fact both binaural perception and the two-channel stereo impression are based on time-critical signal differences, just like bats orientate themselves ultra-sonically not in, but via sound as (a substitute for) space.
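The run-time difference invoked here can be made concrete with a minimal sketch. It assumes a simplified far-field model in which the delay between the two ears is the ear spacing times the sine of the source angle, divided by the speed of sound; the spacing (0.18 m), the speed of sound (343 m/s) and the function names are illustrative assumptions, not values taken from this essay.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at roughly 20 degrees Celsius
EAR_DISTANCE_M = 0.18       # assumed: illustrative spacing between the two ears


def interaural_time_difference(azimuth_deg, ear_distance=EAR_DISTANCE_M,
                               c=SPEED_OF_SOUND_M_S):
    """Run-time difference in seconds for a distant source.

    azimuth_deg is 0 for a source straight ahead and 90 for a source
    directly to one side (simplified sine model, hypothetical helper).
    """
    return ear_distance * math.sin(math.radians(azimuth_deg)) / c


def azimuth_from_delay(delay_s, ear_distance=EAR_DISTANCE_M,
                       c=SPEED_OF_SOUND_M_S):
    """Invert the model: recover a direction from a measured delay."""
    ratio = max(-1.0, min(1.0, delay_s * c / ear_distance))
    return math.degrees(math.asin(ratio))


delay = interaural_time_difference(30.0)
direction = azimuth_from_delay(delay)
```

In this toy model a direction is nothing but a delay read backwards, which is one way of stating that the spatial impression is computed from time-critical differences.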

Media artist Nam June Paik, in his 1961 design of a Symphony for 20 Rooms, allowed for the audience to move between spaces and make a random choice of sound sources, thereby allowing for a so-called “space-music” to unfold. [8] But such a media-based theatricalization is still a conventional stage for musical action to unfold. Paik himself later switched to discovering and unfolding soundscapes (from) within electro-acoustic devices themselves. Sound, here, is no dramaturgical supplement but rather becomes a genuinely non-spatial event.

It is only with echolocation that the relationship between sound and space becomes media-active. Through the radar, and the sonograph, “space” became a direct product(ion) of time-critical signal (re-)transmission. In auditory (rather than visual) terms, the production of “space” is in fact a temporal unfolding between the present moment (t) and its time-critical calculation f(t).

As it has been exemplified in Edmund Husserl’s phenomenology, there is a dynamic interplay between the ongoing short-time memory retention and protension as a cognitive condition for the musically encoded harmonic melody. In psycho-acoustic terms, the brain time-critically synthesizes an impression of “space” from binaural information in a rather operative sense. This is “computational space.” [9]

There Is No “Musical Space”: Understanding the Musical Situation with Günther Anders

Voluminosity rather than geometrical “space” is the existential mode of acoustic events, according to philosopher Günther Anders’ unachieved habilitation thesis Philosophische Untersuchungen über musikalische Situationen from the years 1930–31. [10] Anders focuses on the tempor(e)ality of the “musical situation,” where “situation” is explicitly defined not as space. [11]

According to Anders, there may be spaces of musical production, but there is no “musical space,” not even a spatio-temporal unfolding. What he calls “the musical situation” is a form of existence of its own. [12] Even if music is derived from movement in space (ancient Greek mousiké), it has “little to do with space as a system of coexistence [System des Nebeneinander, in Lessing’s sense].” [13] Music dispenses with spatial objectivity as such. It is only to the human ear, as a secondary quality, that sounding matter across spatial distance becomes decisive for the “musical” event to occur. [14]

That all changed, according to Anders, with radio transmission of music and the “acoustic stereoscope,” where loudspeakers are the technical location of acousmatic non-space. [15] Acousmatics refers to sound that one hears without seeing the—spatial—origin behind it. [16] In terms of cultural techniques, the production of music could always be located either on the composer’s desk (symbolically on paper), or in resonant architectures (like the orchestra). With phonography and radio, though, the arts of mousiké (dance, music, poetry) became dissociated from the “space” of production, turning ubiquitous.

According to Anders, “there is no abstract or empty acoustic space within which space-objects exist, but only spatial acoustic objects or ‘events’ which, in themselves, have space-properties or structures.” [17] Once technically (re-)produced, the traditional musical space becomes a secondary phenomenon, while the actual production recedes into hard-wired circuitry as an operative diagram. The translation into conventional space only happens at the level of phenomenal user experience, at the loudspeaker interface.

When music is realized “in the medium of tones,” [18] it loses its two-dimensional geometry of the score and does not simply enter into but actually itself creates a dynamic spatio-temporal dimension. Anders uses the term “Raum der Töne” in quotation marks. [19] Space is not the a priori for music to unfold; it is rather closer to a mathematical topology in which even the problematic terminology of “cyberspace” becomes metaphorical. That makes the “musical situation” and its “Tonraum” structurally analogous to technological media in their processuality. [20] Like signal transduction in an electronic artifact, the “musical” tone can come to existence only in time [21] or, rather, as time.

As captured by the name of the Berlin-based sound art gallery Errant Sound, sound is always already ephemeral, unfolding in space but as time. Its spatiotempor(e)al existence oscillates between the symbolic order of musical notation (harmonics as spatial co-existence of tones) and the physically actual sonic sequences (melodics). There is no “musical space” as long as music is primarily defined as a time-based art. But music is itself an ordering of merely symbolic time signals in something like the way rhythmic notation becomes operative “algorhythm” in choreography and within digitally clocked computing. Is “space,” then, merely a condition of possibility (a priori) which music needs to unfold? It does not even require space when electro-magnetic waves, as a form of implicit sonicity, propagate themselves via electro-magnetic processes. Space is not the Lacanian “real” which escapes in musical composition [22] but it is the tempor(e)al in which it unfolds as sound.

Sonified electric waves, or impulses, can fill bounded architectural space, while still being non-spatial as a technical media event (they exist as alternating voltages in electric circuits). So-called “drone music” actually amplifies the violence of the acoustical signal, which is mechanical air pressure against the eardrum, as distinct from electromagnetic waves; it is literally called Troubled Air in a track by the “drone doom” band Sunn O))). [23] Acoustic space is in this sense a reverberant time-function. There is an implicit sonicity in architectural space, a kind of sounding in latency, as if a Gothic cathedral were waiting for the organ to fill it with sound events and their reverberations. Composers of organ music actually create works with respect to the site-specific “echo response” and resonances given by the individual architecture. “Acoustic space” in McLuhan’s sense, [24] on the other hand, adds media time to the symbolic musical notation. Here, room-acoustic “communication” occurs between an organ tone and its architectural enclosure. The organ instrument itself, as a technical apparatus (a Heideggerian Gestell), acts as an organon of sounding matter. A nonhuman kind of “machine” is at work in room acoustics: acoustic resonance is “a subset of mechanical resonance.” [25]

Sonic Dramaturgies in Contemporary Theatre

Theatre and opera have for a long time only marginally focused on the sonic qualities of the material stage. But sound and stage are tightly intertwined, and media-archaeologically at that. As a scientific object of research, the study of room acoustics arose from theatrical space directly, as exemplified by Prussian architect Carl Ferdinand Langhans’ investigation of the room acoustics of the ellipsoid Berlin National Theatre in the early nineteenth century. [26] Langhans’ diagram of acoustic “rays” in theatre architecture was still oriented toward geometrical (that is, visual) perspective. Conversely, the visual pyramid that figured so prominently in Renaissance optics may itself be deciphered as an analogy to the sonic traces of binaural room perception.

Proper acoustic space has been unveiled only later, especially in music halls and lecture theatres, as a function of convolutional time signals. In the early twentieth century, physicist Wallace Clement Sabine developed his acoustometric and mathematical equation to calculate “reverberation time,” that is, the time it takes for the reverberant sound in a closed room to decay into inaudibility. The actual “medium message” of such room acoustics is decay and delay: the temporal channel. The coefficients of such a formula do not relate to architectural “space” as form anymore, but to different material factors like material absorption. [27] The Kantian a priori of human perception is replaced by mathematical algebra.
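Sabine's relation can be sketched in a few lines. The version below uses the commonly cited metric form, in which reverberation time equals 0.161 times the room volume divided by the total absorption (each surface area multiplied by its absorption coefficient, then summed). The hall volume, surface areas and coefficients are hypothetical values chosen only for illustration, and the function name is an assumption of this sketch.

```python
SABINE_CONSTANT_S_PER_M = 0.161  # commonly cited value for metric units


def sabine_reverberation_time(volume_m3, surfaces):
    """Estimate reverberation time (seconds) from volume and absorption.

    surfaces is a list of (area_m2, absorption_coefficient) pairs; the
    shape of the room does not enter the calculation at all.
    """
    total_absorption = sum(area * alpha for area, alpha in surfaces)
    if total_absorption <= 0:
        raise ValueError("total absorption must be positive")
    return SABINE_CONSTANT_S_PER_M * volume_m3 / total_absorption


# Hypothetical hall: plaster walls, wooden floor, upholstered seating.
hall_surfaces = [(400.0, 0.05), (200.0, 0.30), (150.0, 0.60)]
rt60 = sabine_reverberation_time(3000.0, hall_surfaces)  # about 2.84 s
```

Only volume and material absorption appear as parameters, which illustrates the point made above: the formula addresses decay in time, not architectural form.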

With electronically produced sound, finally, a different, genuinely mediated theatre unfolds. True media theatre is not simply media-extended theatre: While the theatrical stage is still a cultural technique that enables body-related performances, true media theatre refers to the autonomization of the machine from within: the technológos of non-human “musical” intelligence as investigated by radical media archaeology.

Media epistemology is concerned with “fields” rather than “spaces,” for it pays attention not only to acoustics but to electronically implicit sonicity. Field recording becomes active acoustic space through electro-magnetic waves that extend as speed rather than in spatial immediacy, while exerting a force on other charged particles present in the field. Electro-magnetic “radio” technologies do not simply make use of space but generate their field as channels of transmission themselves.

Sound may take place in space but not as space. It rather defines its own field and “vector space” in the scientific and mathematical sense. While acoustics is dependent on physical propagation (the molecules of air), implicit sonicity (electromagnetic waves) unfolds as a self-(re-)generating medium “channel.”

Soundscape compositions, once they become objectified in and as electronics, unfold in material and mathematical topologies rather than in abstract “space,” “giving rise to multifaceted situations that call for a redefinition of musical expertise.” [28] Even when sound itself becomes the object of an exhibition, it preserves its autopoietic agency. [29]

In media culture, “musical space” in the traditional architectural or environmental sense has become secondary. Sonic articulation primarily arises from within technical devices—so to speak, from the “backstage” (the technological “subface”) [30] behind the phenomenal interfaces that couple man and machine. Media theatre is not the technically augmented stage anymore, but the drama which “algorhythmically” [31] unfolds within computing, its inner-technical musicality.

Experiencing the Implicit Sonicity of Architectural Space

While Günther Anders’ philosophical inquiry into the non-space of the musical situation is predominantly phenomenological, media-archaeological analysis looks at the spaces of musical production with respect to non-human agencies such as the electronic synthesizer.

Obviously, the human auditory apparatus remains central to the definition of room acoustics. [32] But there are also electronic “ears,” such as sensors, antennas, microphones and electrosmog analysers (EM “sniffers”) which sonify electromagnetic impulses ranging from the Megahertz to the Gigahertz band.

Fig. 1 – Detektor with headphones (2011), constructed by Shintaro Miyazaki and Martin Howse. Courtesy of Shintaro Miyazaki.

It takes media-active sonification to make implicit non-spatial sonicity sound explicit. While any commercial radio receiver filters musical or oral content from the actual carrier waves for transmission, an electromagnetic wave sniffer directly resonates with waveforms that operate already in latency: not produced but rather revealed by technical sensors. “Acoustic space” turns out to be an inaudible technical infrastructure. It is ironic that inaudible signals enable mobile media like the smartphone for humans to tele-communicate audibly at all. [33]

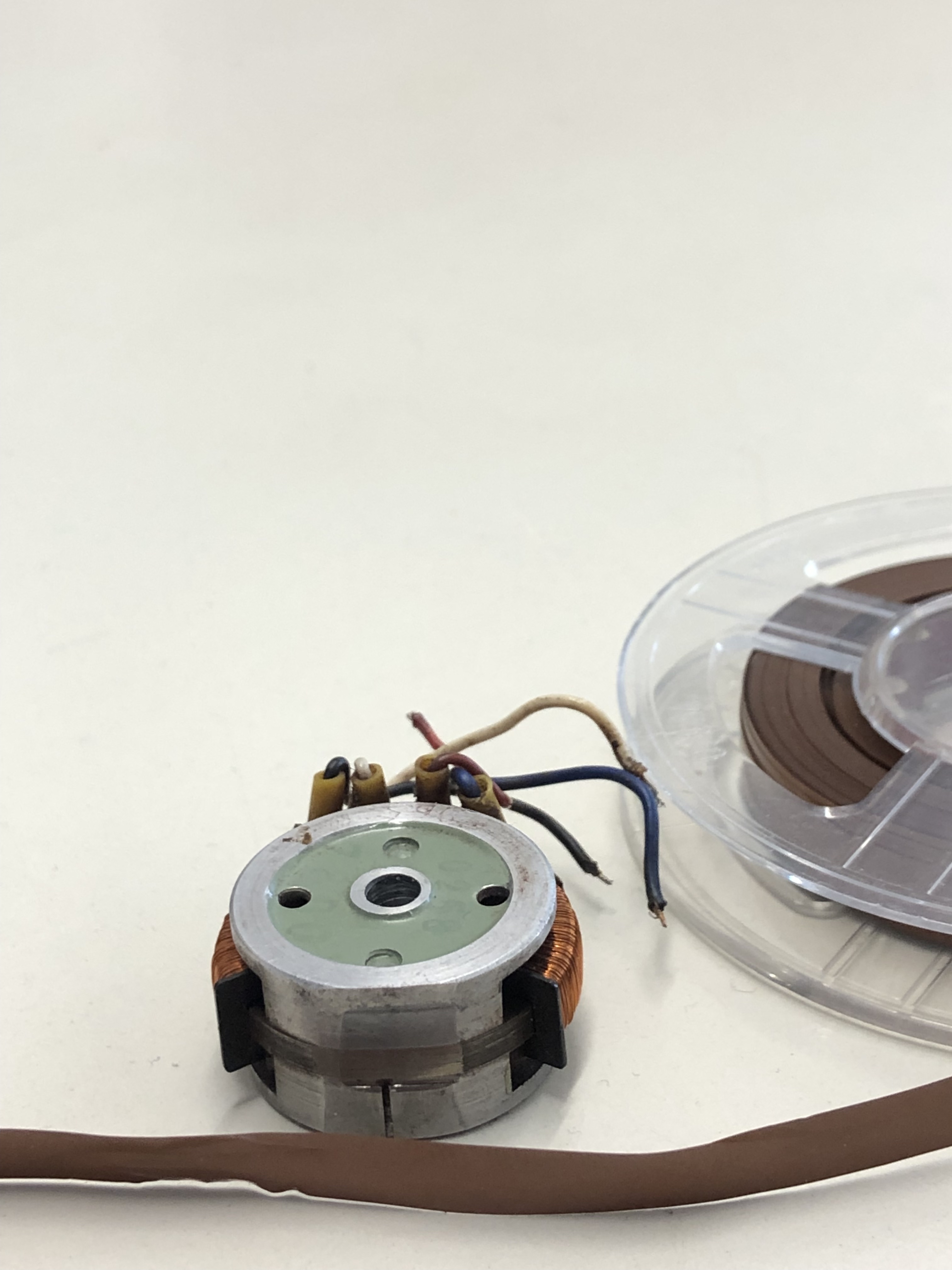

Implicit or explicit sound vibrations and pulses media-essentially differ from the symbolic order of musical notation (scores) or frozen sound archives (records). Such signal storage technologies as the magnetophone are like a technological “stage” which transforms (or freezes) sonic time into “space.” Its reciprocal function is inductive replay, thereby intertwining musical space and sonic time like a Moebius loop (literally so, by means of the magnetic tape in reel-to-reel recording). A frequently quoted line from Richard Wagner’s Parsifal (Gurnemanz’s “Time turns into space…”) is media-archaeologically “grounded” in the form of a concrete technology of sound (re-)production. [34]

For his conceptual use of a room itself as a musical instrument, Alvin Lucier’s literally site-specific sound art installation I am Sitting in a Room (1969) depended on the memory capacity of an electro-acoustic device. His opening self-referential speech articulation was recorded on magnetic tape. This recording was then played back into the room and recorded again, an operation well known from echo delay in sound engineering and from exploring a closed architectural space by means of acoustic pulses which are folded upon themselves. When every subsequent signal is a replica of the same information delivered within a temporal interval, acoustic space itself dissolves into a function of temporal signal propagation. “In bounded spaces, reflected sound folding over on itself creates resonant nodes that cause spaces to act as filters, nonlinearly amplifying some frequencies and damping others. We never hear a sounding object by itself, always an assemblage of sounding object and resonant space.” [35]

As soon as a sonic signal is being recorded on a magnetic tape instead of the gramophone record, “it becomes a substance which is malleable and mutable and cuttable and reversible … The effect of tape was that it really put music in spatial dimension [italics added], making it possible to squeeze music, or expand it.” [36] What happens here is a kind of secondary, inner-technical micro-spatialization of sound that even extended to time-stretching. Music is not placed in a literal space in this case, but the very electronic act of coupling already amounts to the techno-musification of sound. The uniquely non-inscriptive qualities of the magnetic tape radically differ from phonographic recording. “The tangle of these foundational operations of tape sets the stage for their ... usage in the auditory culture.” [37]

Edgar Varèse once defined music as spatio-temporally “organized sound.” Once embodied in a medium like the magnetic tape, music radically turns into sonicity, dissolving into subnotational, sub-musical signals. The magnetophone “made one aware that there was an equivalence between space and time, because the tape you could see existed in space, whereas the sounds existed in time.” [38] But what really happens is the encounter between the copper coil and the magnetic tape when it passes the tape head. The reel-to-reel recorder itself becomes the actual “stage” in which the spatialization of the sonic time signal occurs.

Fig. 2 – Tape head from GDR tape recorder (Smaragd BG 20, 1962 / 63), plus magnetic tape.

“Musical space” is replaced by an electromagnetic “field.” The musical lógos technically interacts with—or rather, becomes—an electrophysical “event.” The singularity of electro-magnetic induction unfolds as a dynamic field, not as inert space. In the case of the tape recorder, the magnetic field induced by transduction of the vibrating voice or sound does not even touch the magnetizable plastic tape. Musical or “dramatic” (that is: time-ordered) space, and its temporality, converge in this implicitly sonic field. Storage media convert time signals into spatial records, and allow for the inverse operation at any arbitrary time-delayed moment. Traditionally, signal storage takes place in space, [39] be it the one-dimensional acoustic signal or the two-dimensional video image. Musical “space” can be only symbolically fixed on paper as score, but not as actual sound propagation. It can only be re-produced from the physicality of the phonograph, magnetic tape, or other signal recording media. Whenever Maria Callas’ voice emanates from a record player, critical attention should move from the implied opera scene to the actual “place” of musical production: to the studio and its pre-productions in the form of magnetic master tapes. A media-archaeological analysis of “musical production” is therefore focused on its microtechnical condition of possibility, below the acoustic performance of bodies or loudspeakers in space. The spatio-temporal production and signal processing of “sound” (as technically engineered music) no longer takes place exclusively in empirical “space,” that is, the space mapped by human hearing, but actually occurs in the technologies themselves: for the “ears of the machine.” [40] The inner-technological drama displaces, and even replaces the familiar theatrical scene to begin with. In a nonhuman sense of “deep listening,” [41] machine learning from artificial neural nets has already understood this musical technológos.

Part II. (Mis-)understanding “Acoustic Space”

“Acoustic Space” as Understood by McLuhan

Concerning the “production of musical space,” the question arises: where does music as sound, as opposed to symbolically organized score, actually “take place”? Sound unfolds not simply in space but, spatio-tempor(e)ally, as time signal.

Musical production can be precisely located (on paper as score, in the studio as electronic music, in code as digital event), but once such a symbolic regime comes into the world as actual sound, it unfolds not simply “in space,” but spatio-temporally. Sound is chronotopic—a term borrowed from Mikhail Bakhtin, [42] but in a less narrative, more physical sense.

Where sound takes place, a different kind of spatiality arises: a function of dynamic signal propagation. “Acoustic space” becomes “musical” with rhythmical impulses as known from the “radio” world of electromagnetic waves. According to Marshall McLuhan, the media-tempor(e)al specificity of simultaneous electromagnetic wave communication is opposed to the linear design of spatial perspective that itself looks like a graphic “echo response.” Unlike the visually oriented “typographic,” geometricized space of the Gutenberg print era, “acoustic space” constitutes a different simultaneity.

McLuhan insists that electricity is of the same nature as the acoustic world in its ubiquitous being. His notion of “acoustic space” refers to the sphere of electromagnetic waves rather than to architectural sonic spheres but has been inspired by architectural thinking most literally. [43]

Sonic Spheres have been literally installed recently in New York to “experience music in all its dimensions.” The installation consisted of a loudspeaker-based, suspended concert hall for “immersive, 3-D sound and light explorations of music” [44] in the tradition of Karlheinz Stockhausen’s Kugelauditorium (built for the 1970 World Exposition in Osaka).

For the sake of the analysis of room acoustics, “space” becomes a function of the critical response time of sonic micro-signals: “Reverberation time, usually counted in seconds, is the duration between the emission of a sound and the decay in its intensity below the level of human perception, as it echoes in a room.” [45] Transcending human perception, the propagation of electro-magnetic “Hertzean” waves drastically escalates, since its temporal limit is the speed of light itself. Since human auditory perception depends on frequency and mechanical force, no sense of space can be directly derived from EM waves. In that trans-phenomenal sense, McLuhan’s term “acoustic” space, somewhat confusingly, refers to the electrosphere.

Architectural Sonicity, and the Re-Production of “Musical Space”

Site-specificity can be measured and explored through acoustical signals understood as spatial impulse responses and echoes folded upon each other. As an acoustical entity, a space is site-specific because of the unique acoustic features of each piece of architecture. Certain frequencies are emphasized or vanish as they resonate in space. Environmental or architectural space can thus be explored via media-active signal propagations which are not spatial in nature but time-critical. The engineering of room acoustics can even be extended to “auralization” as a reenactment of past architectures.

At first glance, “historical” space cannot be experienced auditorily, since by definition sonic articulation perishes already in the moment it is being expressed. The ephemeral tone escapes spatial endurance. But medieval cathedrals (when still existing) elicit involuntary memories of past soundscapes, rather like time machines. [46] Digital Signal Processing and computer-based tools like wave field synthesis that media-archaeologically recapture Christiaan Huygens’ approach to sound propagation even allow for the virtual (that is, computed) reconstruction of “historic” acoustic spaces.

The scientific investigation of room acoustics therefore relates not only to the instrumental and mathematical calculation of reverberations in present lecture halls, theatres and opera houses, but also to the mapping of past sounding environments. While “acoustic space” can only unfold as presence, it can still be digitally sampled and re-produced by mapping it onto another space. Acoustic spaces from the past can media-archaeologically be simulated by computational “audification.”

An archaeology of the acoustical retrieves the memory of sound out of architectural spaces. But unlike historical research that depends on textual archives and the human imagination, it does so by media-active means. By mapping computer-simulated room acoustics on past architectures, for example, audio engineer Stefan Weinzierl achieved the retro-“auralization” of the Renaissance Teatro Olimpico in Vicenza. Whereas modern theatre acoustics privileges speech intelligibility, the virtual reconstruction of the Teatro Olimpico acoustic space suggests that it should rather be conceived as a musical performance space aimed at the revitalization of drama in antiquity. [47]

It is thereby possible to digitally render back the acoustics of past architectural spaces, such as the acoustics of ancient Greek theatres. But the Binaural Room Impulse Response (BRIR) operation which is “the key to auralization” is still somewhat anthropocentric in its simulation approach and different from actual machine “listening” which is based on objective measuring. It turns space itself into a media theatre to begin with. “The auralization technique has matured to such a level, that the human ear can hardly tell whether it is a simulation of not,” [48] reminding one of Maurice Blanchot’s acoustemic diagnosis of the sirens episode in Homer’s Odyssey: sweetest human singing arising from (visually obvious) monsters (the antique proxy of modern technologies). From a media-archaeological investigation of the acoustics of the Li Galli islands at the Italian Amalfi coast there has emerged indirect evidence that there are site-specific properties of that “acoustic space” which actually resonate with the enharmonic musical tuning of ancient Greek music. [49] Suddenly, a long-standing vocal myth is media-archaeologically revealed to be grounded in sonic evidence.

The Spatio-Temporal Message of Sound: Active Sonar

It has been the observation of acoustic delay (the echo effect) that once induced Aristotle to discover communication as a function of the “medium” channel in itself: the “in-between” (to metaxy). [50]

The implicit tempor(e)ality of “sonic space” becomes active with the sonar in submarine communication. It creates a sonic pulse called a “ping” and then waits for its reply from reflections. The time from emission of a pulse to the reception of its echo is measured by hydrophones or other sensors to calculate the distance; “space” thereby becomes a signal function of the temporal interval. The delay in echo acoustics induced Aristotle to focus on to metaxy, the channel as materially intervening: “the between” in signal transmission (be it water, air, or a hypothetical medium called “ether”). To metaxy has been translated into the Latin medium in medieval scholastic texts.
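The calculation described here, distance as a function of the interval between ping and echo, can be sketched as follows. The sketch assumes a constant propagation speed of 1500 m/s, a nominal figure for seawater that in practice varies with temperature, salinity and depth; the function name is hypothetical.

```python
SPEED_OF_SOUND_SEAWATER_M_S = 1500.0  # assumed nominal value; varies in practice


def distance_from_echo(round_trip_s, c=SPEED_OF_SOUND_SEAWATER_M_S):
    """Distance (metres) to a reflector, given the ping-to-echo interval.

    The pulse travels to the reflector and back, so only half of the
    measured interval corresponds to the one-way path.
    """
    if round_trip_s < 0:
        raise ValueError("the echo cannot arrive before the ping is sent")
    return c * round_trip_s / 2.0


range_m = distance_from_echo(2.0)  # an echo after 2 s implies 1500 m
```

The only measured quantity is a time interval; “space” appears solely as the return value of the function.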

Submarine sonar (echo) location (“Ortung”) by “ping” not only identifies but actually generates a different kind of “space” function.

According to the Aristotelian definition of “time” found in book IV of his Physics, such signals are not in time, but they are actually timing themselves: they temporalize space. When it comes to echolocation, it is the temporal delay in sound propagation that itself becomes constitutive of calculated “space.”

Once sound is understood as a delayed presence [51], the Kantian a priori of a transcendent “time” and “space” [52] is replaced by the medium channel that is the engineering condition of telecommunication. More radically, the auditory scene morphs into implicit sonicity: from a passive echolocation that is still addressed to the human ear to media-active radar wave emission. With the onset of radar detection, the role of sound has finally been replaced by electricity as the “vehicle for the transmission of intelligence,” in Frederick Hunt’s words (who himself once coined the very term “sonar”). [53] Where “the required information is embodied in the time-delay of the received waveform,” [54] McLuhan’s rather idiosyncratic notion of “acoustic space,” which assumes that the electric is always instantaneous, is media-scientifically corrected.

Lucier’s I am Sitting in a Room Redux

A media-archaeological understanding of “spaces of musical production” returns us to Alvin Lucier’s seminal media-artistic and (literally) site-specific performance I am Sitting in a Room (1969): a “spatial” modulation of sound-in-the-loop. The human performer, as in Samuel Beckett’s drama Krapp’s Last Tape, is coupled with a technical operator: the magnetic tape recorder. Not unlike the musical architecture of medieval cathedrals, the physical room of Lucier’s performance modulates the electro-acoustic signal by determining its early decay time and standard reverberation time. “Space” itself is (re-)produced by sound, becoming the message of ultra-, or even infra-sonic, timing signals and echolocation. [55]

Just like Lucier’s recursive room acoustics, the endless falling glissandi that were once computationally achieved by additive sound synthesis with the MUSIC-V program and punched cards by Jean-Claude Risset at Bell Telephone Laboratories phenomenologically resulted in a “spatial” impression by feeding the sonic information back into the loop with a delay of 250 ms. This creates an additional (additive) “beat” (“Schwebung”) with varying frequency [56] thereby generating sonic spatiality not with tones in symbolic “musical” notation, but as tonality from within the physically real space itself.
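The feedback principle mentioned here, a signal fed back into the loop after a fixed delay, can be illustrated with a generic feedback delay line. This is a minimal sketch of the general technique and not a reconstruction of Risset's MUSIC V instrument; the feedback gain of 0.5 and the deliberately tiny sample rate of 8 Hz are assumptions chosen so that the recirculating echoes can be read off directly.

```python
def feedback_delay(signal, delay_samples, feedback_gain, length=None):
    """Generic feedback delay: y[n] = x[n] + g * y[n - D]."""
    n_out = length if length is not None else len(signal)
    out = [0.0] * n_out
    for n in range(n_out):
        dry = signal[n] if n < len(signal) else 0.0
        # The output from D samples ago is attenuated and fed back in.
        wet = feedback_gain * out[n - delay_samples] if n >= delay_samples else 0.0
        out[n] = dry + wet
    return out


SAMPLE_RATE_HZ = 8                           # hypothetical, for readability only
delay_samples = int(0.250 * SAMPLE_RATE_HZ)  # 250 ms corresponds to 2 samples here
echoes = feedback_delay([1.0], delay_samples, 0.5, length=7)
# echoes == [1.0, 0.0, 0.5, 0.0, 0.25, 0.0, 0.125]
```

A single impulse returns every 250 ms at half its previous amplitude. The sketch shows only this recirculation; the beating and the spatial impression discussed above depend on the synthesized glissandi being fed through such a loop.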

Lucier’s I Am Sitting in a Room was first performed (or, rather, technically operated) at the Brandeis University Electronic Music Studio in 1969. Lucier’s voice was recorded via a microphone on tape, played back into the room, and picked up again by the same microphone, over and over, until the voice became anonymous and was eventually overshadowed by the resonant frequencies of the room (its acoustic unconscious), revealed by the articulations of the vocal utterance. This is no longer human speech (as with an echo) but the machinic voice (in both senses: recorded speech, and the dynamics of magnetophone electronics itself: technológos). [57] Such an operation decouples the acoustic real from the musical symbolic, propelling it into the realm of the signal time domain.

The acoustic tape delay “mediates” between the present and the immediate past. The process of recording was itself repeated 32 times. The agency that actually “produced” this sonic media-drama was the magnetic tape player “on stage,” that is, coupled with room acoustics. But with electronic (and finally digital) echo delay, the machine dispenses with “acoustic space” altogether.
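How repeated re-recording lets the room’s resonances overshadow the voice can be illustrated with a deliberately crude model. It assumes that each playback-and-recording pass multiplies the signal’s spectrum by the same room response, so that after n passes every frequency band is weighted by the room’s gain raised to the power n. The five bands and their gain values are invented for illustration and do not describe the Brandeis studio or any measured room.

```python
# Each pass through the room multiplies every band by the room's gain;
# after many passes only the strongest resonance remains audible.

# Hypothetical room response: band centre frequency (Hz) -> gain per pass.
ROOM_GAIN = {100: 0.80, 200: 0.95, 400: 0.85, 800: 0.70, 1600: 0.60}


def rerecord(spectrum, room_gain, passes):
    """Return the band amplitudes after `passes` playback/re-recording cycles."""
    current = dict(spectrum)
    for _ in range(passes):
        current = {f: a * room_gain[f] for f, a in current.items()}
        # Renormalise to a peak of 1.0, like resetting the recording level.
        peak = max(current.values())
        current = {f: a / peak for f, a in current.items()}
    return current


def dominant_share(spectrum):
    """Fraction of total energy (amplitude squared) held by the strongest band."""
    energies = [a * a for a in spectrum.values()]
    return max(energies) / sum(energies)


voice = {f: 1.0 for f in ROOM_GAIN}  # idealised flat "speech" spectrum
after = rerecord(voice, ROOM_GAIN, passes=32)  # 32 passes, as in the text

if __name__ == "__main__":
    print("strongest band (Hz):", max(after, key=after.get))
    print("share of energy before:", dominant_share(voice))
    print("share of energy after: ", dominant_share(after))
```

With these invented gains, the band with the highest gain starts with one fifth of the energy and holds more than 99 percent of it after 32 passes: the flat “voice” has been replaced by the room’s own strongest resonance.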

There have been countless reenactments of Lucier’s seminal installation in which the loop of tape recorder and microphone is replaced by a programmed digital delay. But such a calculated delay erases the room-acoustic production of “musical space” by means of the implicit musicality of both hardware and software: the computational “algorhythm.” To summon Lucier’s sonic installation in digital reproduction online from the YouTube archive amounts to a spatialization, a freezing of resonant acoustic “drones” in alphanumerically addressable storage “locations.” Any visual projection on a screen turns acoustic space into a two-dimensional image, leaving the “audience” with the mere, geometrically constructed illusion of spatial depth (Leon Battista Alberti’s finestra aperta).

Instead of digitally simulating Lucier’s original setting, it is media-archaeologically more radical to actually escalate Lucier’s concept into cyber-“space” itself. In an explicit allusion to I Am Sitting in a Room, but adapting its premise to the techno-logics of digital platforms, a video file was uploaded to YouTube, then downloaded. This download was then uploaded to YouTube again, and so on, until after 1000 iterations the data-compression algorithm for mp4 files had reduced the original event to mere glitches: noisy artifacts as “a digital palimpsest.” [58]

The noisy artifacts of the video codecs of YouTube and the mp4 format are the counterparts of the entropic impact of the analog tape recorder in Lucier’s original. The analogy does not primarily concern media aesthetics but is symptomatic of the quality of the digital data economy as revealed by technology-based artistic research. A video displays a shortened rendition of the I Am Sitting in a Video Room project, with highlights of the 1000 iterations of the original video experiment.

With this updating of Lucier’s classic sound installation in and as cyber-“space,” all musical, or verbal, room metaphors (which still made sense for “analogue” media sound art) dissolve, or are sublated, into digital signal processing and its techno-mathematical topologies. Even the final noise in Lucier’s version is replaced by well-calculated stochastics (or “pseudo-random” events). What used to be “musical space” in live acoustic signal propagation has become time-critical real-time computing.

* Originally delivered as a keynote lecture at the conference Spaces of Musical Production/Production of Musical Spaces, organized by the editorial team of Sound Stage Screen in collaboration with the Department of Cultural Heritage at the University of Milan (Triennale Milano, 3–5 November 2023).

[1] See Steve Goodman, Sonic Warfare: Sound, Affect, and the Ecology of Fear (Cambridge, MA: MIT Press, 2009).

[2] This differentiation has been a topic of the conference Sound and Music in the Prism of Sound Studies, École des hautes études en sciences sociales, Paris, January 24–26, 2019.

[3] Shintaro Miyazaki, “Algorhythmics: Understanding Micro-Temporality in Computational Cultures,” Computational Culture, no. 2 (2012).

[4] For a generalized notion of “sonicity” see Wolfgang Ernst, Sonic Time Machines: Explicit Sound, Sirenic Voices, and Implicit Sonicity (Amsterdam: Amsterdam University Press, 2016), 21–34.

[5] Quoted in Otto E. Laske, Music, Memory, and Thought: Explorations in Cognitive Musicology (Ann Arbor: University Microfilm International, 1977), 5. See also Christian Kaden, “‘Was hat Musik mit Klang zu tun?!’ Ideen zu einer Geschichte des Begriffs ‘Musik’ und zu einer musikalischen Begriffsgeschichte,” Archiv für Begriffsgeschichte, no. 32 (1989): 34–75.

[6] Steven Feld, “Acoustemic Stratigraphies. Recent Work in Urban Phonography,” Sensate Journal, no. 1 (2011).

[7] R. Murray Schafer, The Tuning of the World (New York: Alfred A. Knopf, 1977).

[8] “Nam June Paik: I Expose the Music,” Art Culture Technology, accessed May 18, 2024.

[9] Konrad Zuse, “Calculating Space (Rechnender Raum),” in A Computational Universe: Understanding and Exploring Nature as Computation, ed. Hector Zenil (Singapore: World Scientific, 2013): 729–86.

[10] Günther Anders, “Philosophische Untersuchungen über musikalische Situationen,” in Musikphilosophische Schriften: Texte und Dokumente, ed. Reinhard Ellensohn (Munich: C. H. Beck, 2017), 13–140.

[11] Anders, 90–4.

[12] Anders, 51.

[13] “So wenig hat sie doch mit dem Raume als System des Nebeneinander irgend etwas zu tun.” Günther Anders, “Spuk und Radio,” in Musikphilosophische Schriften, 248–50.

[14] Joseph L. Clarke, “Ear Building: Zuhören durch moderne Architektur,” in Listening / Hearing, ed. Carsten Seiffarth and Raoul Mörchen (Mainz: Schott, 2022), 235–51.

[15] “Radikal wird die der Musik zukommende Raumneutralität zerstört erst im Radio.” Anders, “Spuk und Radio,” 249.

[16] Pierre Schaeffer, Treatise on Musical Objects: An Essay across Disciplines, trans. Christine North and John Dack (Oakland: University of California Press, 2017), 64.

[17] Günther Anders, “The Acoustic Stereoscope,” Philosophy and Phenomenological Research 10, no. 2 (1949): 257.

[18] “Im Medium des Tones,” Anders, “Philosophische Untersuchungen über musikalische Situationen,” 97. It harks back to Georg Wilhelm Friedrich Hegel, Vorlesungen über die Aesthetik, ed. H. G. Hotho, vol. 3 (Berlin, 1838), 127.

[19] Anders, “Philosophische Untersuchungen über musikalische Situationen,” 93.

[20] Anders, 94.

[21] Anders, 98.

[22] See Sabine Sanio, “Ein Blick hinter den Vorhang? Zum Verhältnis von Musik und Medienästhetik,” in Akusmatik als Labor. Kunst – Kultur – Medien, ed. Sven Spieker and Mario Asef (Würzburg: Königshausen & Neumann, 2023), 42.

[23] Sunn O))), Metta, Benevolence BBC 6Music: Live on The Invitation of Mary Anne Hobbs, Southern Lord Recordings sunn 400, 2021, CD.

[24] See Edmund Carpenter and Marshall McLuhan, “Acoustic Space,” in Explorations in Communication: An Anthology, ed. Edmund Carpenter and Marshall McLuhan (Boston: Beacon Press, 1960), 67–9.

[25] Peter Price, Resonance: Philosophy for Sonic Art (New York: Atropos Press, 2011), 21.

[26] Carl Ferdinand Langhans, Ueber Theater oder Bemerkungen über Katakustik in Beziehung auf Theater (Berlin: Gedruckt bei Gottfried Hayn, 1810).

[27] See Viktoria Tkaczyk, Thinking with Sound: A New Program in the Sciences and Humanities around 1900 (Chicago: University of Chicago Press, 2023), 124.

[28] Giorgio Biancorosso and Emilio Sala, “Editorial. Five Keywords and a Welcome,” Sound Stage Screen 1, no. 1 (2021): 5.

[29] See Sonja Grulke, Sound on Display: Klangartefakte in Ausstellungen (Marburg: Büchner, 2023).

[30] Frieder Nake, “Das doppelte Bild,” Bildwelten des Wissens 3, no. 3 (2005): 40–50.

[31] Miyazaki, “Algorhythmics.”

[33] Shintaro Miyazaki, “Urban Sounds Unheard-of: A Media Archaeology of Ubiquitous Infospheres,” Continuum 27, no. 4 (2013): 514–22; Oswald Berthold, EM-Sniffing (Humboldt Universität zu Berlin Seminar für Medienwissenschaft, 2009).

[34] On material concretizations of previously simply symbolic dramatic arts in Richard Wagner’s Musikdrama see Friedrich Kittler, “Weltatem: Über Wagners Medientechnologie,” in Diskursanalysen 1: Medien, ed. Friedrich A. Kittler, Manfred Schneider and Samuel Weber (Opladen: Westdeutscher Verlag, 1987): 94–107.

[35] Price, Resonance, 20.

[36] Brian Eno, “The Studio as Compositional Tool,” in Audio Culture: Readings in Modern Music, ed. Christoph Cox and Daniel Warner (New York: Continuum, 2004), 128. See also Maximilian Haberer, “Tape Matters. Studien zu Ästhetik, Materialität und Klangkonzepten des Tonbandes” (PhD diss., Heinrich Heine University Düsseldorf, 2023).

[37] Peter McMurray, “Once Upon Time: A Superficial History of Early Tape,” Twentieth-Century Music 14, no. 1 (2017): 31.

[38] Thom Holmes, Electronic and Experimental Music: Technology, Music, and Culture, 6th ed. (New York: Routledge, 2020), 107.

[39] “Alle Speicherung erfolgt im Raum.” Horst Völz, “Versuch einer systematischen und perspektivischen Analyse der Speicherung von Informationen,” Die Technik 20, no. 10 (1965): 651.

[40] Morten Riis, “Where are the Ears of the Machine? Towards a Sounding Micro-Temporal Object-Oriented Ontology,” Journal of Sonic Studies, no. 10 (October 2015).

[41] Pauline Oliveros, Deep Listening: A Composer’s Sound Practice (New York: iUniverse, 2005).

[42] See Mikhail M. Bakhtin, “Forms of Time and of the Chronotope in the Novel: Notes toward a Historical Poetics,” in The Dialogic Imagination: Four Essays, ed. Michael Holquist (Austin: University of Texas Press, 1981), 84–258.

[43] Carpenter and McLuhan, “Acoustic Space,” 67–9.

[44] See “Sonic Sphere,” The Shed website, accessed September 1, 2023.

[45] Alfredo Thiermann, “Radio as Architecture: Notes toward the Redefinition of the Berlin Walls,” in War Zones: gta papers 2, ed. Samia Henni (Zurich: gta Verlag, 2019), 79n33.

[46] Barry Blesser and Linda-Ruth Salter, “Ancient Acoustic Spaces,” in The Sound Studies Reader, ed. Jonathan Sterne (London: Routledge, 2012), 195.

[47] Stefan Weinzierl, Paolo Sanvito, Frank Schultz and Clemens Büttner, “The Acoustics of Renaissance Theatres in Italy,” Acta Acustica united with Acustica 101, no. 3 (May/June 2015): 632–41.

[48] Jens Holger Rindel and Claus Lynge Christensen, “Room Acoustic Simulation and Auralization: How Close Can We Get to the Real Room?” (keynote lecture, The Eighth Western Pacific Acoustics Conference, Melbourne, 2–9 April 2003).

[49] Wolfgang Ernst, “Towards a Media-Archaeology of Sirenic Articulation: Listening with Media-Archaeological Ears,” The Nordic Journal of Aesthetics, no. 48 (2014): 7–17; for the scientific report, see Karl-Heinz Frommolt and Martin Carlé, “The Song of the Sirens,” The Nordic Journal of Aesthetics, no. 48 (2014): 18–33.

[50] Emmanuel Alloa, “Metaxu. Figures de la médialité chez Aristote,” Revue de Métaphysique et de Morale 106, no. 2 (2009): 247–62.

[51] Wolfgang Ernst, The Delayed Present: Media-Induced Interventions into Contempor(e)alities (Berlin: Sternberg Press, 2017).

[52] Immanuel Kant, Kritik der reinen Vernunft (Frankfurt: Suhrkamp, 1997), 45–96.

[53] Frederick V. Hunt, Electroacoustics: The Analysis of Transduction, and its Historical Background (Cambridge, MA: Harvard University Press, 1954), 2; see also Christoph Borbach, “Signal Propagation Delays: Eine Mediengeschichte der Operationalisierung von Signallaufzeiten. 1850–1950” (diss., University of Siegen, 2022).

[54] Philip M. Woodward, “Theory of Radar Information,” Transactions of the IRE Professional Group on Information Theory 1, no. 1 (1953): 108.

[55] Deborah Howard and Laura Moretti, Sound and Space in Renaissance Venice: Architecture, Music, Acoustics (New Haven, Conn.: Yale University Press, 2009).

[56] Liner notes in Werner Kaegi, Vom Sinuston zur elektronischen Musik, Der Elektroniker, 1971, LP.

[57] Hanjo Berressem, Eigenvalue: Contemplating Media in Art (New York: Bloomsbury, 2018).

[58] Christoph Borbach, email message to author, July 24, 2023. See Ontologist, “VIDEO ROOM 1000 COMPLETE MIX - All 1000 videos seen in sequential order!,” YouTube video, uploaded on June 10, 2010.